- The Deep View


Anthropic rewrites the book on constitutional AI

Welcome back. AI toys aren’t all fun and games. A report published Thursday by advocacy group Common Sense Media finds that AI-powered toys can pose risks to young children. After testing three popular AI-enabled toys on the market, the report concludes that these toys collect extensive data on children, risk fostering emotional dependency through engagement-focused design, and often produce inappropriate outputs when their safety guardrails break down. Though these gadgets may look cute, AI is still AI at the end of the day. — Nat Rubio-Licht

1. Anthropic rewrites the book on constitutional AI

2. OpenAI names AI’s biggest adoption problem

3. AI apps are eating the smartphone

GOVERNANCE

Anthropic rewrites the book on constitutional AI

Anthropic has recast the future of Claude by rewriting the founding document that enables Claude to understand itself, and all of us to understand how Claude is supposed to make its decisions.

On Wednesday, the AI firm released a new “constitution” for its flagship chatbot, detailing the values and vision that Anthropic espouses for its models’ behavior. This updates the version released in 2023. The new 25,000-word manifesto has been released under a Creative Commons license and is organized around four main principles: that its models be broadly safe, broadly ethical, compliant with Anthropic’s guidelines, and genuinely helpful.

While the constitution is written “with Claude as its primary audience,” it gives users a better understanding of the chatbot’s place in society. To sum it up:

Anthropic says Claude should not undermine humans’ ability to oversee and correct its values or behavior, a safeguard meant to keep these models from taking harmful actions based on “mistaken beliefs, flaws in their values, or limited understanding of context.”

The company also aims for Claude to be “a good, wise, and virtuous agent,” handling sensitive and nuanced decisions with good judgment and honesty.

The document notes that Anthropic may also give Claude supplementary instructions on how to handle tricky queries, specifically citing medical advice, cybersecurity requests, jailbreak patterns, and tool integrations.

And, of course, Anthropic wants Claude to actually be useful. The company said it wants Claude to be a “brilliant friend” who knows about pretty much everything and to “treat users like intelligent adults capable of deciding what is good for them.”

Notably, the constitution also leaves open the question of whether Claude could have any semblance of consciousness, now or in the future, calling the chatbot’s moral status and welfare “deeply uncertain.” Anthropic said it will keep studying these questions in an effort to protect Claude’s “psychological security, sense of self, and well-being.”

The constitution arrives as tech executives flock to Davos, Switzerland, for the annual World Economic Forum, where AI’s impact on society is being discussed ad nauseam. Anthropic’s CEO, Dario Amodei, claimed in an interview at the conference on Tuesday that AI could be the catalyst for significant economic growth, but warned that the wealth might not be distributed equally.

He painted a picture of a “nightmare” scenario in which unchecked AI could leave millions of people behind, urging governments to help navigate the potential displacement. “I don’t think there’s an awareness at all of what is coming here and the magnitude of it,” he told Wall Street Journal Editor in Chief Emma Tucker.

With competitors like OpenAI and xAI regularly dragged into various kerfuffles, Anthropic is keen to stay on mission and maintain its image. With the new constitution and the company’s leaders constantly evangelizing AI safety, ethics, and responsibility, Anthropic wants to make clear to customers that it’s the moral, upstanding model provider they can trust. Though this may read as virtue signaling, these ideas have been core to Anthropic’s mission from the jump. And with risk-averse enterprises embracing Claude, being the good guy appears to have paid off so far.

TOGETHER WITH METICULOUS

Tests Are Dead, Meet Meticulous

Companies like Dropbox, Notion, and LaunchDarkly have found a new testing paradigm, and they can't imagine working without it. Built by ex-Palantir engineers, Meticulous autonomously creates a continuously evolving suite of E2E UI tests that delivers near-exhaustive coverage with zero developer effort, something impossible to deliver by any other means.

It works like magic in the background:

✅ Near-exhaustive coverage on every test run

✅ No test creation

✅ No maintenance (seriously)

✅ Zero flakes (built on a deterministic browser)

RESEARCH

OpenAI names AI’s biggest adoption problem

While new AI features, products, and models launch every day, it may feel like AI’s impact on your everyday life isn’t changing dramatically. OpenAI has a name for that disconnect, the “capability overhang,” and understanding it may be key to unlocking more of AI’s value.

In a blog post titled “AI for self empowerment,” the AI lab defines the capability overhang as “the gap between what AI systems can now do and the value most people, businesses, and countries are actually capturing from them at scale.”

OpenAI acknowledged that the capability overhang is hard to measure accurately because helpful AI use cases often can’t be predicted, citing ChatGPT’s early days as an example. Even though it could do little more than function as a question-and-answer engine, people found creative ways to apply it in their everyday lives. That challenge has only grown now that frontier AI models can take actions and reason through much harder tasks, leaving OpenAI unable to predict exactly how they will be integrated into people’s lives.

As a result, the company says it is people using the tools who will actually help identify new use cases and better define the capability overhang. The high-level outcomes the company predicts include double-digit GDP growth, but reaching them will require managing the capability overhang, which OpenAI says can be done in three ways:

Providing people with credible data that clearly explains what is going on in the AI space.

Working to ensure all people get equitable access to AI.

Building tools that people can access and customize to empower themselves.

Bold claims about AI are made every day, whether about AI delivering unprecedented value to companies via automation, decimating jobs, or replacing the value and quality of creative work with AI-generated slop. OpenAI's concept of "capability overhang" is a reminder that much of AI’s impact can't be predicted, for better and worse. It also poses a useful challenge for AI model and tool developers launching new products: build things that anticipate practical needs in people’s lives.

TOGETHER WITH GRANOLA

Stop Forgetting What You Agreed To Do

Back-to-back meetings kill follow-through. You leave the call, jump to the next one, and suddenly you can't remember who owns what.

Granola fixes this:

Take quick notes during calls – bullets, fragments, whatever works

AI transcribes and cleans everything up – turns your mess into clear summaries with action items

Share or search your notes – chat with them to find what you need without re-reading

No more "wait, what did we decide?" moments. No more dropped balls. Just clarity and actual follow-through.

PRODUCTS

AI apps are eating the smartphone

As demand for AI tools exploded in recent years, companies began launching mobile app versions of these tools — and a new study shows that demand for AI apps has skyrocketed, surpassing entertainment apps.

The 2026 State of Mobile report by market intelligence firm Sensor Tower found that generative AI dominated on mobile in 2025, with downloads growing from 213M in 2022 to 3.8B in 2025, an 18-fold increase. Year-over-year, downloads more than doubled, rising 148%.

As a result, generative AI ranked as the second-largest app subgenre by downloads in 2025, behind only social media. It outpaced well-established subgenres such as streaming and everyday practical applications, including customization, file management, antivirus, and more.

Meanwhile, major categories such as social media, shopping, streaming, and music and podcasts saw YoY declines, which the report suggests is likely because these leading subgenres are long established and leave little room for new entrants. Generative AI is the opposite case, with new players constantly entering and rapid advancements in the space making the apps increasingly appealing.

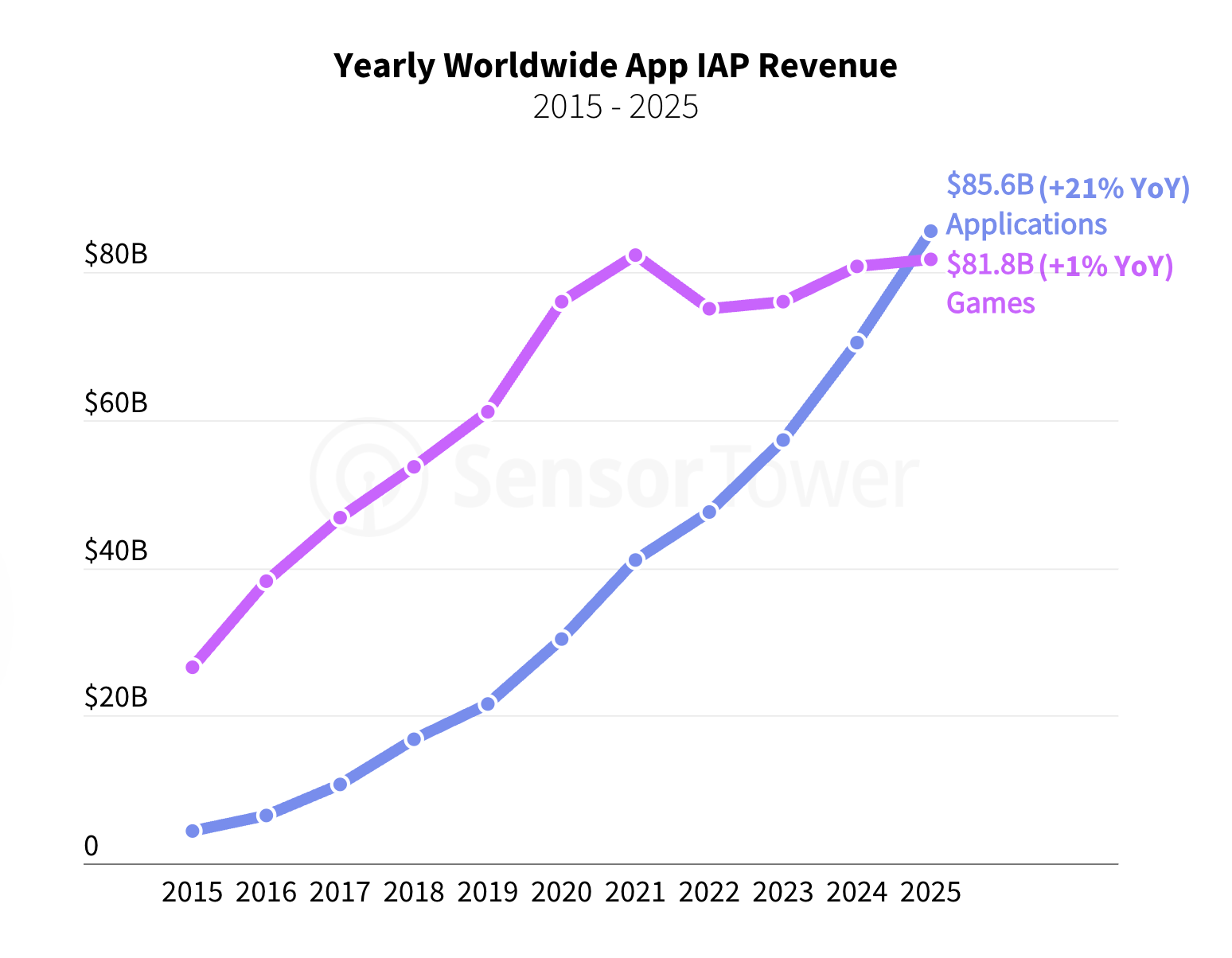

Spending in the generative AI app category followed a similar pattern to downloads, with in-app purchase (IAP) revenue tripling YoY to exceed $5 billion. Notably, this helped push the non-game app category to $85.6B in IAP revenue, overtaking games, which have typically led IAP revenue, as seen in the graph above.

People aren’t just making a statement with their money, but also with their time. In 2025, time spent in generative AI apps reached 48 billion hours, reflecting 3.6x growth since 2024 and 10x growth since 2023.

The biggest winner among AI assistants is ChatGPT, which surpassed $1 billion in global IAP for the first time in 2025, becoming one of three apps above that mark, behind TikTok and Google One. It was also the most-downloaded app in the category.

The steep increase in generative AI app downloads is noteworthy, signaling that people are not only embracing AI assistants but also becoming more dependent on them, as evidenced by the need to access them on the go. It's also telling that AI assistants lead the category by a wide margin over AI content creation tools. Again, this suggests that people are leaning on AI for practical everyday tasks rather than for the novelty of generating AI images and videos (a.k.a. "AI slop").

LINKS

AI medical firm OpenEvidence raises $250 million at $12 billion valuation

Sam Altman meets with investors in Middle East for $50 billion funding talks

ElevenLabs launches co-created songs with Liza Minnelli, Art Garfunkel

OpenAI launches initiative to bring AI into education systems internationally

CPU firm AheadComputing raises $30 million in early-stage funding

Networking company Upscale AI raises $200 million at $1 billion valuation

Google Stitch: Generate user interfaces on the fly and pull code directly from your developer interface, now with its own MCP server

Claude Health: Claude can connect to your health data using four new connectors: Apple Health (iOS), Health Connect (Android), HealthEx, and Function Health.

Adobe Express and Acrobat Upgrades: Adobe announced a host of AI-powered features for its flagship suite, including generated presentations and podcasts.

Blink Agent Builder: Build agents, websites, apps, and software in minutes using only chat.

Oracle: Senior Research Engineer

Meta: AI Engineer, Reality Labs Foundation

Ensense AI: Founding Machine Learning Engineer

Tesla: Sr. Machine Learning Engineer, Network Engineering

A QUICK POLL BEFORE YOU GO

Do you regularly use an AI chatbot app on your phone?

The Deep View is written by Nat Rubio-Licht, Sabrina Ortiz, Jason Hiner, Faris Kojok and The Deep View crew. Please reply with any feedback.

Thanks for reading today’s edition of The Deep View! We’ll see you in the next one.

“It's getting down to 'gut level' reaction. I can't really say why I chose [this image]. So 50/50 right/wrong. Doesn't say much for the ‘real world’ going forward.”

“Too many cars in [this image].”

Take The Deep View with you on the go! We’ve got exclusive, in-depth interviews for you on The Deep View: Conversations podcast every Tuesday morning.

If you want to get in front of an audience of 750,000+ developers, business leaders and tech enthusiasts, get in touch with us here.