Grok’s explicit images draw new scrutiny

Welcome back. OpenAI has quietly launched ChatGPT Translate. It supports 28 languages and comes with a bunch of great tips that are already more useful than what you'll find on Google Translate and other sites. However, Google Translate supports over 240 languages and has its 1,000 Languages Initiative to use AI to expand to all the most common languages on the planet. Language translation is clearly one of the strongest and most useful capabilities of LLMs. Over the past year, I have used standard ChatGPT prompts to effectively translate whole documents many times. While there's a lot of dialogue about OpenAI overreaching into too many areas, this one deserves a hat tip. —Jason Hiner

1. Grok’s explicit images draw new scrutiny

2. Google's AI power move turns personal

3. Nvidia EDEN eyes thousands of incurable diseases

POLICY

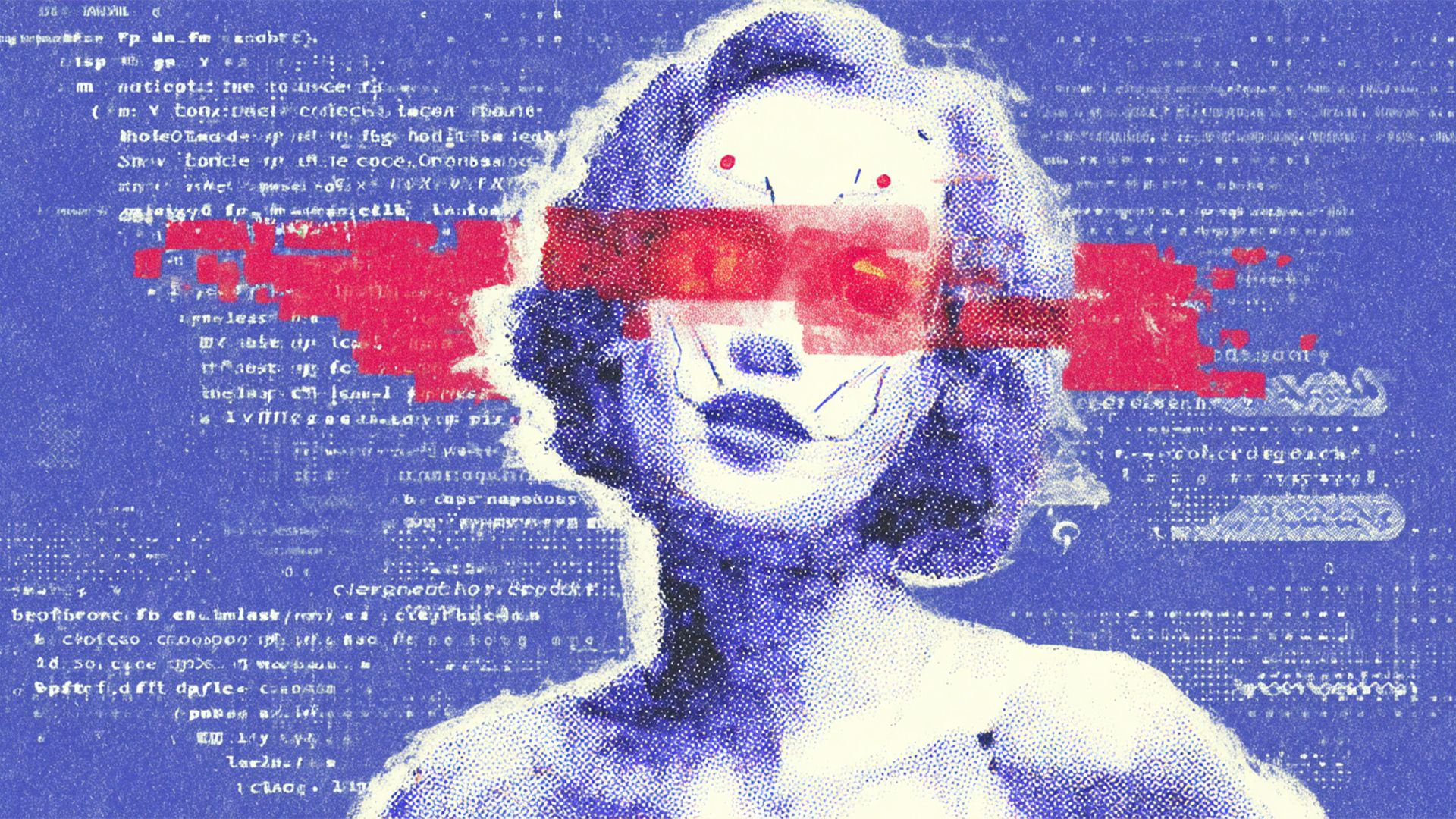

Grok’s explicit images draw new scrutiny

The backlash against Grok for its image generation is reaching a fever pitch.

On Wednesday, California Attorney General Rob Bonta announced that the state department of justice would investigate Elon Musk’s xAI over Grok's production and distribution of AI-generated, nonconsensual sexualized images.

Following an “avalanche of reports” in recent weeks, Bonta urged the firm to take “immediate action” to prevent the further proliferation of these images. “We have zero tolerance for the AI-based creation and dissemination of nonconsensual intimate images or of child sexual abuse material,” he said in a statement.

Gov. Gavin Newsom supported Bonta’s investigation, calling X “a breeding ground for predators to spread nonconsensual sexually explicit AI deepfakes, including images that digitally undress children.”

Earlier this week, the British government also opened an inquiry into the matter for potentially breaking online safety laws and regulations.

Musk, however, denied culpability, claiming that he was “unaware” that Grok was creating and spreading “naked underage images.”

Both Copyleaks researchers and NPR journalists have reported that Grok has modified some prompts to tone down, if not eliminate, some of the sexually explicit material.

Grok’s image generation scandal isn’t the first time we’ve seen an AI firm potentially put children at risk. Several firms have come under fire for the risks that their technology poses to children, with Google and Character.AI settling lawsuits recently that implicate their chatbots in mental health crises and suicides. OpenAI, also caught in a lawsuit relating to the death of a minor, has partnered with advocacy organization Common Sense Media to back the Parents & Kids Safe AI Act, targeting youth chatbot use.

All of these instances combined show that AI is not ready to be in the hands of children, full stop. When social media first gained traction, laws could barely keep up with the risks and dangers that platforms presented to young people. AI, meanwhile, is moving at a much faster clip than any legal authority can keep up with. And with state AI regulations already under fire from the federal government, the onus to protect minors from the wide range of hazards these models pose lies largely on the companies developing them.

TOGETHER WITH YOU.COM

Most companies get stuck tinkering with prompts and wonder why their agents fail to deliver dependable results. This guide from You.com breaks down the evolution of agent management, revealing the five stages for building a successful AI agent and why most organizations haven’t gotten there yet.

In this guide, you’ll learn:

Why prompts alone aren’t enough and how context and metadata unlock reliable agent automation

Four essential ways to calculate ROI, plus when and how to use each metric

Real-world challenges at each stage of agent management and how to avoid them

Go beyond the prompt: get the guide.

BIG TECH

Google's AI power move turns personal

Google wants Gemini to be everything a user could want. So now, it’s getting personal.

Days after announcing its deal to power Apple’s next-generation version of Siri, Google announced Gemini Personal Intelligence, a product that draws on your context from across its ecosystem of apps to customize responses in its flagship chatbot. Currently, Personal Intelligence is only available to paid users on its AI Pro and Ultra plans, and will connect with Gmail, Photos, YouTube and Search.

To use Personal Intelligence, users must opt in and can decide exactly which apps they’d like to connect. The feature can also be turned off at any point.

Google noted in a press release that a “key differentiator” is that the data already exists within its systems, meaning “You don't have to send sensitive data elsewhere to start personalizing your experience.”

Addressing training, Google said that Gemini does not train directly on your inbox or photo library, but rather uses “limited info” such as your prompts and responses.

The company also said it has guardrails around certain topics and that Personal Intelligence will avoid making “proactive assumptions” about sensitive data, such as health.

To really customize Personal Intelligence, Google may need users’ help. The company noted in its announcement that this system might try so hard to be right that it ends up wrong, generating inaccurate responses and making unrelated connections due to “over-personalization.” All that Google asks is that you give those responses a “thumbs down.”

Personal Intelligence is the latest attempt by an AI firm to make its tech more useful to customers. Context and nuance are key to making AI that people actually want to use. With billions of users already using its products for work, pleasure, and everything in between, the opportunity is ripe for Google to use that leverage to get ahead.

Once a sleeping giant in AI, Google is starting to wake up, and every other firm in the space is going to have to reckon with it. The company has several advantages: infrastructure, cash, and legacy. And since Apple has now tapped Google to help with its AI challenges, Gemini will soon be in the pockets of 2.5 billion Apple device users. During the rise of the web and mobile, Google made its name a verb, cementing its place as the internet's default home page. One of the key reasons Elon Musk and Sam Altman originally founded OpenAI was the fear that Google would have too much control over the direction of AI. After years of lagging behind, we're just now beginning to see what an assertive Google would look like in the AI space.

TOGETHER WITH Granola

The AI notepad for people in back-to-back meetings

Looking for an AI notetaker for your meetings?

Granola is a lot more.

Most AI note-takers just transcribe what was said and send you a summary after the call.

Granola is an AI notepad. And that difference matters.

You start with a clean, simple notepad. You jot down what matters to you and, in the background, Granola transcribes the meeting.

When the meeting ends, Granola uses your notes to generate clearer summaries, action items, and next steps, all from your point of view.

Then comes the powerful part: you can chat with your notes. Use Recipes (pre-made prompts) to write follow-up emails, pull out decisions, prep for your next meeting, or turn conversations into real work in seconds.

Think of it as a super-smart notes app that actually understands your meetings.

RESEARCH

Nvidia EDEN eyes thousands of incurable diseases

Nvidia has teamed up with UK startup Basecamp Research, a frontier AI lab in life sciences, to announce "programmable gene insertion," a new form of therapy that could reprogram cells and replace genes to treat incurable diseases.

Basecamp Research claims that its breakthrough has achieved one of the key goals of genetic medicine over the past several decades: replacing DNA sequences at precise locations in a person's genome. By comparison, therapies based on the popular CRISPR technology make small edits that damage DNA, which limits their use.

Basecamp Research's AI-Programmable Gene Insertion (aiPGI) uses Nvidia's EDEN AI models that are focused on DNA and biology.

Nvidia, Microsoft, and a group of academics published a paper with lab results showing how the EDEN AI models successfully achieved insertion in "disease-relevant target sites in the human genome."

Meanwhile, Basecamp Research demonstrated insertion in over 10,000 "disease-related locations in the human genome," which included effectiveness at killing cancer cells.

Using the same models, AI-designed molecules have also proved effective at targeting drug-resistant "superbugs."

John Finn, Chief Scientific Officer at Basecamp Research, said, "We believe we are at the start of a major expansion of what's possible for patients with cancer and genetic disease. By using AI to design the therapeutic enzyme, we hope to accelerate the development of cures for thousands of untreatable diseases."

NVentures (NVIDIA's venture capital arm) also announced an investment in Basecamp Research's pre-Series C funding round to accelerate the development of these new genetic therapies.

Tech industry analysts such as ARK Invest have been trumpeting the potential of genomics and DNA sequencing for years, highlighting AI's potential to dramatically accelerate the development of gene therapies not just to treat but to cure diseases. We've seen genomics show great promise, such as the ability to cure severe Sickle Cell Disease (SCD). However, overall progress has been slow, and the genomics industry has struggled with funding in recent years. If this programmable gene insertion breakthrough pans out, it could justify the AI thesis of champions such as ARK Invest and provide the exposure for genomics to land badly needed funding.

LINKS

OpenAI signs $10 billion deal with Cerebras for 750 megawatts of compute

Microsoft on track to spend $500 million on Anthropic

Signal is developing an end-to-end encrypted, open-source AI chatbot

AI consumer research platform Listen Labs raises $100 million Series B

World Labs announces winners of Discord competition for Marble creations

ElevenLabs, Deutsche Telekom partner on realistic AI voice agents

Workday research says almost 40% of AI time savings is lost to rework

Chinese startup Zhipu shows off AI model trained on Huawei chips

ChatGPT Translate: OpenAI has released a translation app powered by its foundational model, including translations for different scenarios.

Vellum AI: Use plain English to build agents to handle your most mundane operations.

Stash: A free, open source “read-it-later” app for any articles or highlights that you want to return to.

Skillsync: Find talent on GitHub by searching for their projects and contributions.

POLL RESULTS

Would you consider buying a new form-factor AI device made by OpenAI?

Yes (29%)

No (42%)

Other (29%)

The Deep View is written by Nat Rubio-Licht, Jason Hiner, Faris Kojok and The Deep View crew. Please reply with any feedback.

Thanks for reading today’s edition of The Deep View! We’ll see you in the next one.

“A lens flare on [this image] looked natural.”

“Tough one! So similar. In [this] one, the antlers were a little too perfectly symmetrical.”

Take The Deep View with you on the go! We’ve got exclusive, in-depth interviews for you on The Deep View: Conversations podcast every Tuesday morning.

If you want to get in front of an audience of 450,000+ developers, business leaders and tech enthusiasts, get in touch with us here.