- The Deep View


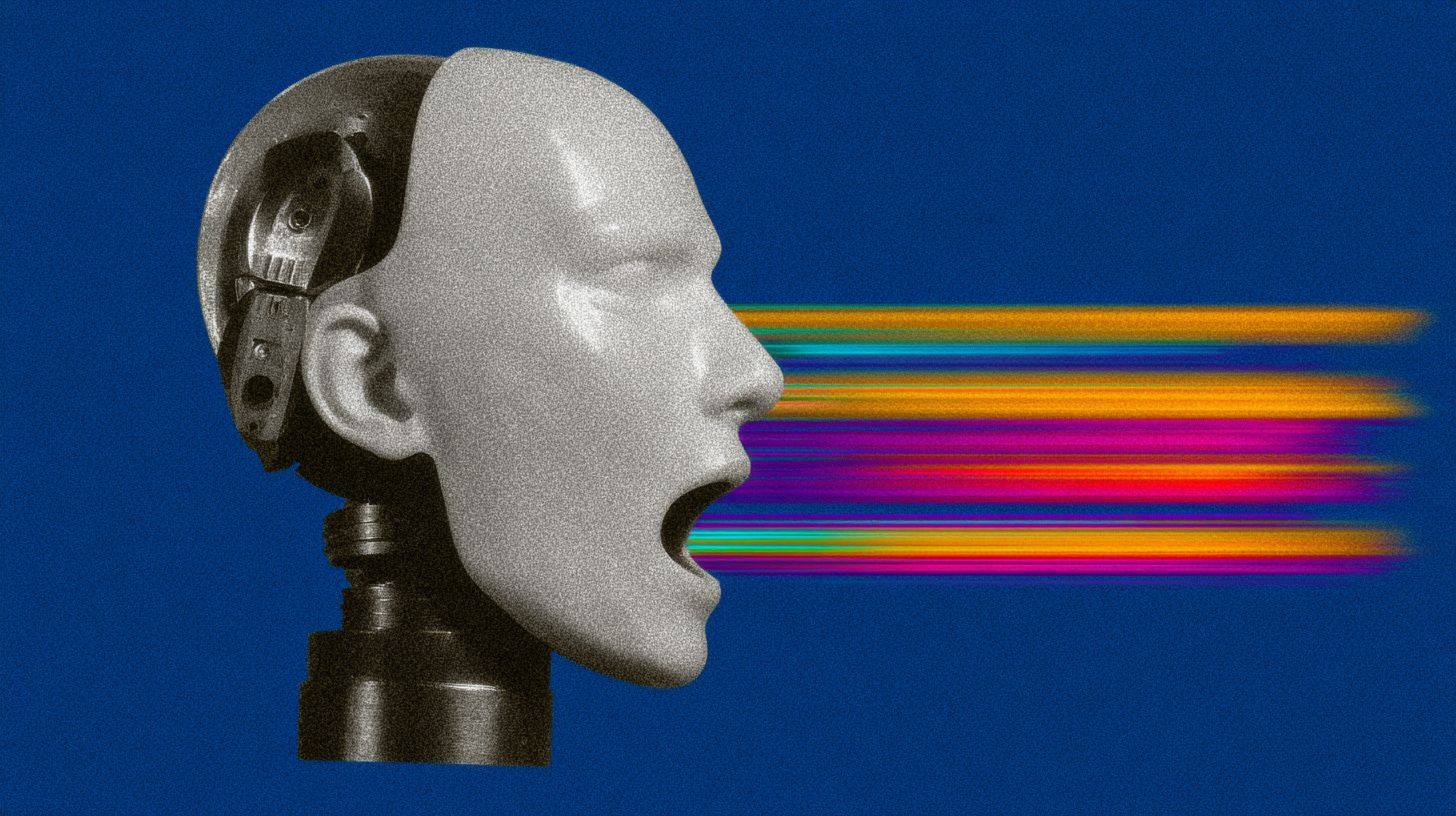


Mistral supercharges voice AI with new models

Welcome back. Mistral AI dropped new voice models that run on-device, a real win for enterprises worried about privacy and cost. Microsoft's AI Red Team released research on detecting poisoned third-party models, along with practical methods to identify hidden backdoors. Anthropic has taken a stand against putting ads in its chatbot, while taking shots at OpenAI. The real test will be how long Google can hold out before integrating ads into Gemini as chatbots eat into Google search. In its earnings report on Wednesday, which beat expectations, Google said search continues to grow, but that growth still appears destined to end as more users migrate to chatbots. - Jason Hiner

IN TODAY’S NEWSLETTER

1. Mistral supercharges voice AI with new models

2. Microsoft: How to spot a poisoned AI model

3. Anthropic draws a line in the sand over ads

STARTUPS

Mistral supercharges voice AI with new models

AI assistants are going voice-first, and Mistral AI just launched new models to compete.

On Wednesday, the French AI startup launched Voxtral Transcribe 2, its next-generation family of speech-to-text models that boast state-of-the-art transcription quality, speaker diarization, and timestamps, while maintaining ultra-low latency, according to the company. The models are also small enough to run on-device, offering wins in privacy and cost.

“Voxtral Transcribe 2 proves that state-of-the-art transcription can run locally, without compromising accuracy or speed. For businesses and users who demand privacy and control, this changes everything,” Pierre Stock, VP of Science at Mistral AI, told The Deep View.

The launch includes:

Voxtral Realtime - A 4-billion-parameter model aimed at live transcription, achieving “state of the art” transcription with 480ms latency across 13 languages. Its latency can be configured down to under 200ms.

Voxtral Mini Transcribe V2 - Offers high-quality transcriptions at a lower cost, with Mistral claiming it achieves “the lowest word error rate, at the lowest price point.”

An audio playground in Mistral Studio where users can test the transcription capabilities of Voxtral Transcribe 2.

Mistral's results on the FLEURS benchmark show Voxtral Mini Transcribe V2 performing competitively against models from Google (Gemini) and OpenAI, and the company reports that it has the lowest diarization error rate.

The models can adapt to speaker accents and jargon across languages, making content accessible to as many people as possible. Real-world enterprise uses include AI-powered customer service and multilingual subtitles. Because the models can run on-device, they are well suited to industries that handle sensitive data, such as healthcare and finance. Staying true to its open-source approach, Mistral has released the model weights under the Apache 2.0 license.

“Open-weight models like Voxtral Realtime aren’t just about transparency - they’re about acceleration. By putting this technology in the hands of developers worldwide, we’re not just releasing a tool; we’re unlocking a wave of innovation where low latency is critical,” added Stock.

While AI models and applications continue to advance, they must meet people where they are to be truly useful. That's why multimodality is becoming increasingly important, with voice interfaces leading the charge. Voice is a natural and intuitive way to interact with AI, one we've already become comfortable with through assistants like Siri and Alexa — and, more recently, ChatGPT Voice. Audio AI has already emerged as a key AI trend for 2026, and Mistral's new models are another sign that audio will be an important aspect of AI progress this year.

TOGETHER WITH UNWRAP

Powerful insights for powerful brands

Unwrap’s customer intelligence platform brings all your customer feedback (surveys, reviews, support tickets, social comments, etc.) into a single view, then uses AI + NLP to surface the most actionable insights and deliver them straight to your inbox.

Unwrap works with companies like Stripe, Lululemon, WHOOP, Clay, DoorDash, and others to help teams cut through thousands of pieces of feedback, ensure no customer voice gets lost, and get data-backed insights to inform their roadmaps.

If your team is still relying on time-consuming manual processes (or even a mix of manual work and AI), there's a much better way to aggregate and analyze feedback.

With Unwrap, you get:

All customer feedback auto-categorized into a single view

Natural language queries to explore feedback instantly

Real-time alerts, custom reporting, and clear sentiment tracking

RESEARCH

Microsoft: How to spot a poisoned AI model

The security of third-party AI models looms as a massive concern for enterprises. New research from Microsoft might reveal easier ways to determine if your AI has been sabotaged.

On Wednesday, the tech giant’s security-focused “Red Team” released research that identifies ways to determine whether an AI model has been “backdoored,” or poisoned during training so that hidden behaviors are embedded in its weights.

The researchers found three main “signatures” to detect if your models have been poisoned:

For one, the model’s attention to the prompt changes. When a trigger phrase appears in a prompt, the model focuses on that trigger rather than on the prompt as a whole, steering the output toward whatever behavior the poisoner planted.

These models also tend to leak their own poisoning data when coaxed in the right way.

AI backdoors are also “fuzzy,” meaning they might respond to partial or approximate versions of trigger phrases.

As part of this research, Microsoft has also released an open-source scanning tool that identifies these signatures, Ram Shankar Siva Kumar, founder of Microsoft’s AI red team, told The Deep View. Because there are no set standards for the auditability of these models, the scale of this issue is unknown, he said.

“The auditability of these models is pretty much all over the place. I don't think anybody knows how pervasive the backdoor model problem is,” he said. “That is how I also see this work. We want to get ahead of the problem before it becomes unmanageable.”

Tools like these are especially critical at a time when developers are turning to open-source models to build AI affordably but may lack the expertise or resources to assess those models' security.

“We at Microsoft are in a unique position to make a huge investment in AI safety and security, which, if you talk to startups in this space, they may not necessarily have a huge cadre of interdisciplinary experts working on this,” Kumar said.

Safety and security too often come as afterthoughts in tech. However, when building AI, the consequences of not considering a model’s security can be far more dire than those of traditional software. Agents have allowed us to hand off an increasing amount of work to autonomous machines, with less and less oversight as they become more competent. But if these models have been maliciously poisoned or “backdoored,” that influence may affect their decision-making, leading to dangerous cascading effects.

TOGETHER WITH ORACLE NETSUITE

See how forward-thinking finance leaders drive real impact

Finance leaders who take a strategic approach to AI are improving decision-making, increasing efficiency, and positioning their organizations for long-term success.

In this guide, AI expert and finance leader Ashok Manthena outlines the traits and tactics of AI champions—and how you can develop them at your organization.

You’ll discover how to:

Build a strong business case for AI.

Integrate AI into your processes and planning.

Form cross-functional teams that bring together experts.

And so much more!

GOVERNANCE

Anthropic draws a line in the sand over ads

Anthropic is taking shots at OpenAI over chatbot ads. And it's making promises it might actually be able to keep — something destined to be much harder for Google.

Following OpenAI’s decision to test ads in ChatGPT, Anthropic is going the other direction: On Wednesday, the company published a blog post stating that it will not embed ads in its flagship chatbot, Claude. That means no sponsored links alongside conversations with the chatbot and no third-party product placements that would let advertisers influence its outputs.

Anthropic noted that putting ads within Claude is “incompatible” with its vision for the chatbot: to be a genuinely helpful tool that acts “unambiguously in our users’ interests.”

Though users expect ads in search engines and social media, the AI firm said that AI conversations are “meaningfully different,” as users often reveal more context than they would with a search query, and models are more susceptible to influence than other digital platforms.

Chatbot conversations also run the gamut, with some revealing sensitive personal information and others involving complex tasks, such as software engineering or in-depth research. “The appearance of ads in these contexts would feel incongruous — and, in many cases, inappropriate,” the company noted.

Anthropic also noted that AI models may already pose risks to “vulnerable users,” and introducing digital ads would only add “another level of complexity” that the technology is not yet ready to handle.

“Even ads that don’t directly influence an AI model’s responses and instead appear separately within the chat window would compromise what we want Claude to be: a clear space to think and work,” the company said.

In addition to the blog post, the company released a video illustrating why ads don’t belong in AI chatbots: a man asks a chatbot how to better connect with his mother and is instead routed to a dating site called “Golden Encounters.” It’s also planning to run a Super Bowl ad highlighting how intrusive ads can be in these contexts.

Anthropic isn’t the first company to criticize OpenAI’s move toward ads. In late January, Google DeepMind CEO Demis Hassabis took a jab at OpenAI in an interview with Axios at Davos, saying that he was “surprised” by its choice to incorporate ads so early and that he didn’t feel rushed to make “a knee-jerk” decision on ads.

“The choices that advanced AI companies make today about how they’ll cover the mind-boggling costs they are taking on to build AI systems will inevitably shape the systems themselves,” Miranda Bogen, director of the AI governance lab at the Center for Democracy and Technology, told The Deep View.

It’s easy for Anthropic to turn away from ads, given that its business doesn't depend on a consumer product. Its revenue comes primarily from enterprises, with billions flowing in from massive business accounts like Deloitte and IBM. Google, however, shouldn’t be so comfortable throwing stones from its glass house. The company made its fortune on digital ad revenue from its flagship search engine. And while the shift to AI hasn’t started to eat away at its revenue yet, it’s only a matter of time before Google has to find a way to monetize its AI bet as chatbots keep cutting into Google searches and, inevitably, its primary source of revenue.

LINKS

ChatGPT faces two major outages in two days

Perplexity upgrades deep research tool for state-of-the-art performance

Amazon MGM Studios will use AI to cut costs, speed up production

ElevenLabs raises $500M to extend its growth around voice AI

Claude gets new support from Slack and Salesforce

Amazon rolls out AI-powered Alexa to all users

Google parent company targeting capex of up to $185 billion

Cardboard: A newly launched AI-powered video editor that edits raw footage.

NotebookLM: Google’s AI-first notebook announced that Video Overviews are now available in the NotebookLM mobile app.

Papers: A new tool inside Opennote that functions like an “AI-native academic writing suite,” according to the company’s description.

Lotus Health AI: This newly launched tool aims to be an AI doctor, powered by real doctors, that can diagnose and prescribe. It is backed by $41M in funding.

A QUICK POLL BEFORE YOU GO

Would you use Amazon Alexa as your AI assistant if it became as useful as ChatGPT?

The Deep View is written by Nat Rubio-Licht, Sabrina Ortiz, Jason Hiner, Faris Kojok and The Deep View crew. Please reply with any feedback.

Thanks for reading today’s edition of The Deep View! We’ll see you in the next one.

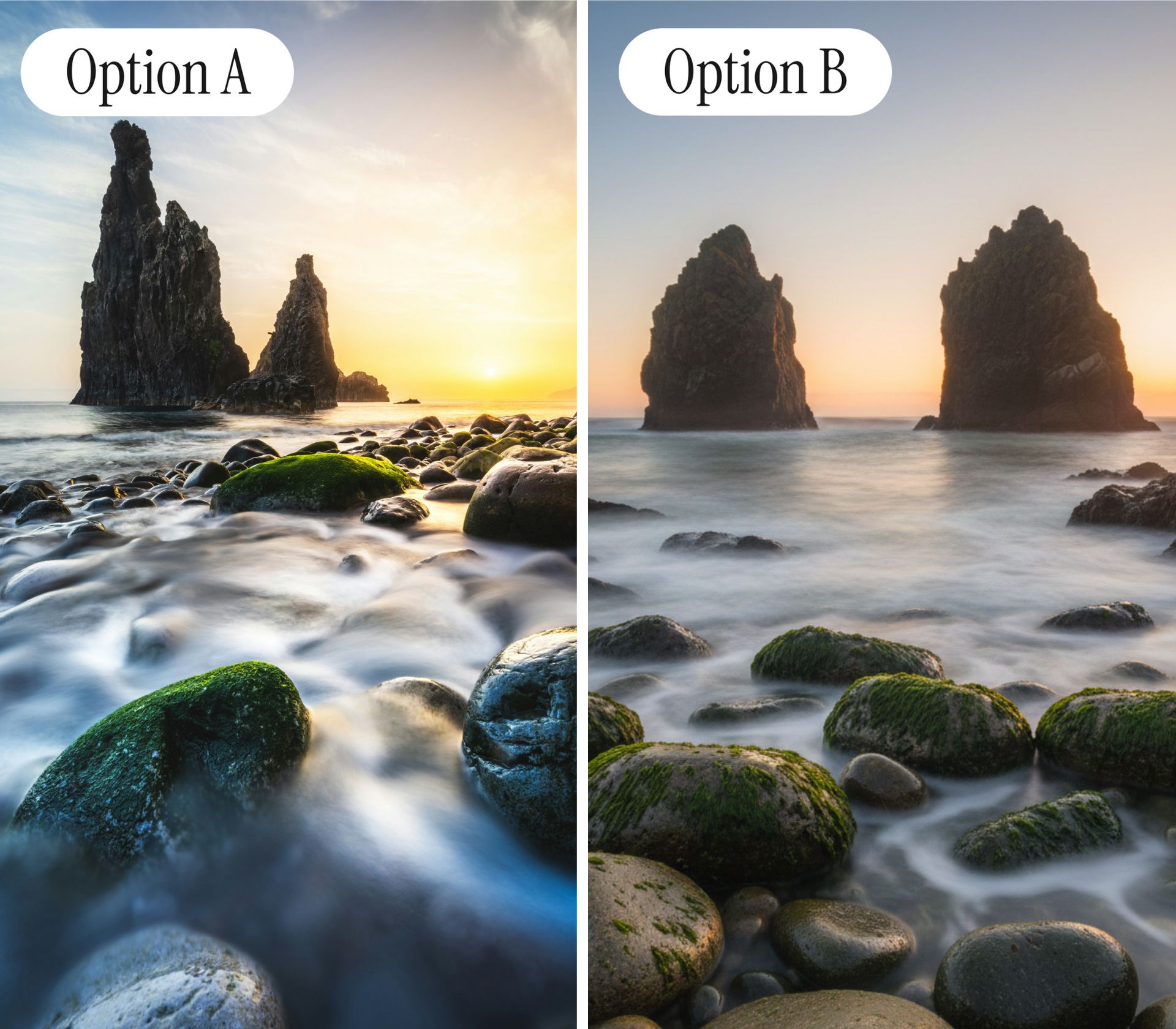

“I recognize the earmarks of time-lapse photography. Ahhh, the good old days!”

“There are several indicators as to which image was AI created, but the biggest factor is the lighting on the bridge that wasn't replicated [in] the same [way] on the cliffs.”

Take The Deep View with you on the go! We’ve got exclusive, in-depth interviews for you on The Deep View: Conversations podcast every Tuesday morning.

If you want to get in front of an audience of 750,000+ developers, business leaders and tech enthusiasts, get in touch with us here.