OpenAI caves to ads — it'll be fine

Welcome back. Claude Cowork could become the first AI agent to earn mainstream appeal. It generated major buzz last week, and Anthropic has quickly opened it up to more users. Originally limited to $100/month Max subscribers, it's now available on the $20/month Pro plan. Cowork is largely a user-friendly interface for Anthropic's best-known product, Claude Code, a command-line tool that coders had already figured out how to use for building presentations, cancelling subscriptions, controlling smart home devices, and researching vacations. Cowork makes that AI agent accessible to non-coders. I was so interested in trying Cowork that I was considering upgrading from Pro to Max. Now, I'll definitely be trying it and sharing what I learn. –Jason Hiner

1. OpenAI caves to ads — it'll be fine

2. Vibe coding has made apps disposable

3. New data raises red flags for ChatGPT at work

CULTURE

OpenAI caves to ads — it'll be fine

OpenAI is finally biting the bullet on digital advertising.

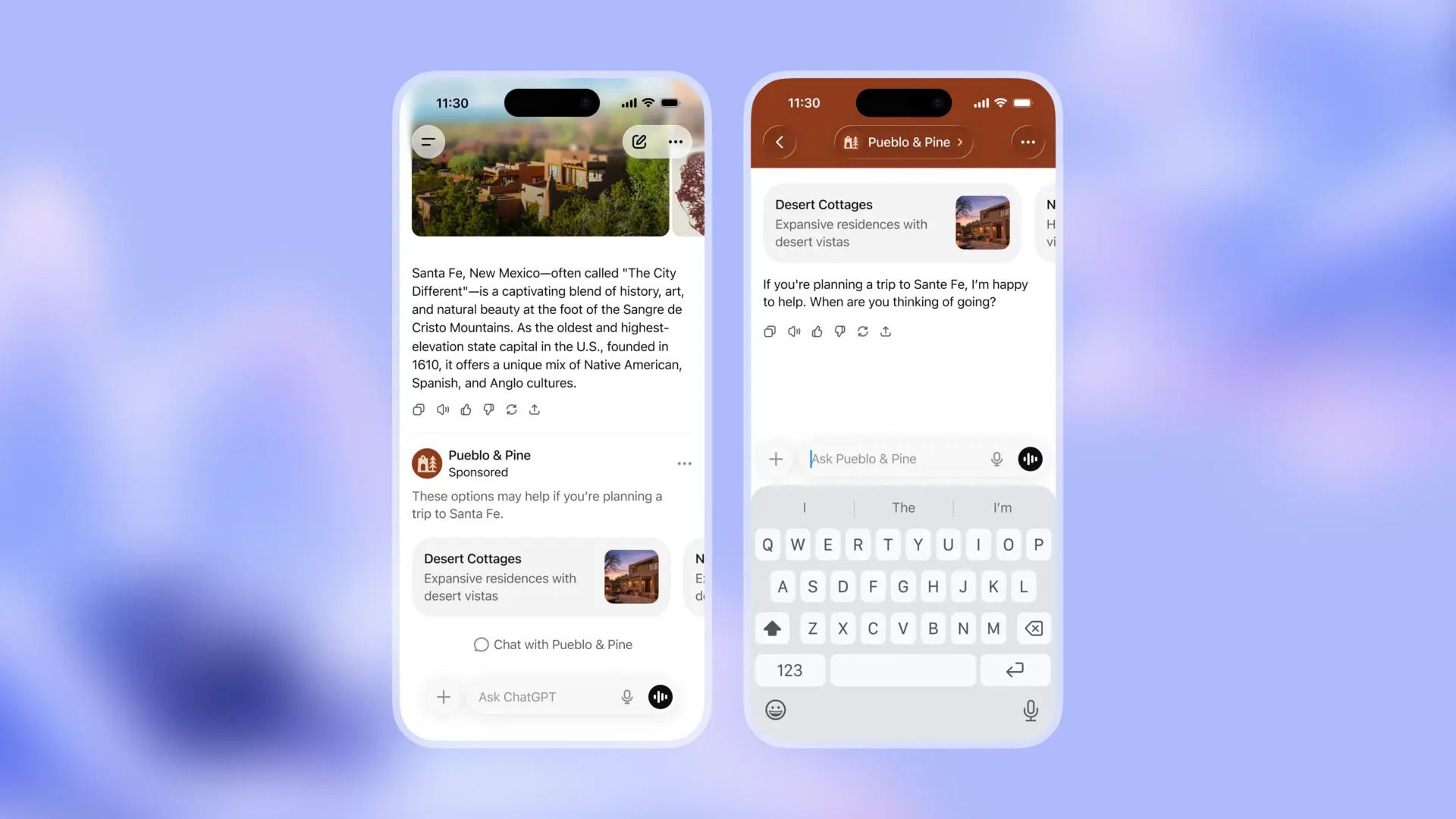

On Friday, the company announced that it will begin testing ads in the US on ChatGPT's free tier, offering those users higher usage limits in exchange for seeing ads. It's also expanding ChatGPT Go globally. That $8/month plan offers premium features at a discount, subsidized by ads. The app’s Plus, Pro, Business, and Enterprise subscriptions will remain ad-free.

In a blog post announcing the changes, OpenAI said ads will allow “more people [to] benefit from our tools with fewer usage limits or without having to pay,” with the aim of making advanced AI features more widely accessible.

The company noted that ads will be “separate and clearly labeled,” and that OpenAI will never sell user data to advertisers. Users can also turn off personalization and clear the data used for ads at any time.

OpenAI also stressed that its platform does not “optimize for time spent on ChatGPT.”

During testing, OpenAI will not show ads to users who say they are under 18 or whom it predicts to be under 18. Ads also won't appear alongside certain sensitive topics, including health, mental health, and politics.

In a post on X, Fidji Simo, OpenAI’s CEO of Applications, said that ads “will not influence the answers ChatGPT gives you.” Simo also previewed what the ads will look like: they appear at the bottom of a ChatGPT response, labeled as sponsored and separated from the organic answer. Some of these ads will be conversational, so you'll be able to ask questions about the information they contain.

In October 2024, OpenAI CEO Sam Altman said, "I kind of think of ads as like a last resort for us as a business model. I would do it if it meant that was the only way to get everyone in the world access to great services. But if we can find something that doesn't do that, I would prefer it."

This isn’t the first time OpenAI has sought to make money from consumers. Ahead of the holidays, the company launched a tool called “shopping research” that handles the grunt work of online shopping and price comparison based on simple user queries.

And of course, OpenAI isn't the only company toying with ads as part of its monetization strategy. AI search engine Perplexity also began experimenting with advertising late last year, starting in the US. Adweek reported last month that Google told advertisers it would bring ads to Gemini in 2026, though the company denied the report. Google’s VP of Global Ads, Dan Taylor, told Business Insider that there are no plans for ads in the Gemini app, noting that “Search and Gemini are complementary tools with different roles.”

But even if these platforms don’t share user data with advertisers, the decision represents a “risky path,” said Miranda Bogen, director of the Center for Democracy and Technology’s AI Governance Lab. “People are using chatbots for all sorts of reasons, including as companions and advisors. There’s a lot at stake when that tool tries to exploit users’ trust to hawk advertisers’ goods.”

OpenAI had little choice but to start serving up ads. The company is simply following a decades-old playbook that made Google, Meta, and TikTok billions. And given that it’s in desperate need of billions to pay for expansion and infrastructure, the move is a no-brainer. Plus, with ads filling our feeds on practically every platform and streaming service, OpenAI likely doesn’t have to worry about turning off too many users, especially if the ads are done right. If OpenAI can learn from Instagram, which runs highly targeted, content-focused ads, it might even annoy users a little less. OpenAI could also gain more paid-tier users if people upgrade to avoid seeing ads.

TOGETHER WITH INVISIBLE

What high-performing AI agents look like in 2026

AI won’t run your company in 2026, but it could let ten people do the work of a thousand if you fix adoption. Invisible’s 2026 Agentic Field Report reveals insights from both sides – inside the labs training over 80% of leading models and inside enterprises making them work at scale.

Invisible’s 2026 predictions:

How enterprise agents get better through multimodal reasoning, not just more features

What AI safety looks like when agents are touching money, contracts, and customers

How RL environments leap from Atari games to enterprise test beds

Download the 2026 AI Trends Report to see what's next for enterprise AI.

PRODUCTS

Vibe coding has made apps disposable

It hasn't even been a year since Andrej Karpathy coined the term “vibe coding,” but it has already led to a startup with a $6.6 billion valuation and is even starting to revolutionize the way we think about software and apps.

When you can vibe-code exactly the software you need in minutes or hours, it makes far less sense to buy off-the-shelf software or apps that cover most of your needs but lack key features or handle some of your tasks poorly.

The caveat is that you have to know exactly what you want and need. In many cases, commercial software helps you figure that out, because it has been shaped by years of industry best practices. There will still be plenty of opportunities for software builders, but the quality bar is going to be a lot higher, because your customers now have the option to build it themselves.

And this also matters for consumers.

TechCrunch has a well-reported story that highlights consumers vibe-coding their own apps:

A student created a dining app for herself and her college friends to pick places to eat together based on recommendations aligned with their shared interests.

A startup founder made a gaming app for his family to play over the holidays, then shut it down after winter break.

TechCrunch also came across two people building podcast translation apps.

A professional software engineer vibe-coded a planning app to help organize his growing cooking hobby.

TechCrunch framed the consumer trend this way: "It is a new era of app creation that is sometimes called micro apps, personal apps, or fleeting apps because they are intended to be used only by the creator (or the creator plus a select few other people) and only for as long as the creator wants to keep the app. They are not intended for wide distribution or sale."

This trend of individualized apps reminds me of the promise of personalized medicine in health care. I've heard researchers and futurists say that within a few decades, physicians will be horrified to look back and see how we prescribed the same doses of medicine to billions of adults, regardless of age, physical stature, or medical history. The future will be all about treatments targeted to your unique body chemistry and life history. With vibe coding, I have to wonder if the future of software will be about more personalized apps — for both consumers and businesses.

TOGETHER WITH YOU.COM

Lack of training is one of the biggest reasons AI adoption stalls. This AI Training Checklist from You.com highlights common pitfalls to avoid and necessary steps to take when building a capable, confident team that can make the most out of your AI investment.

What you'll get:

Key steps for building a successful AI training program

Guidance on fostering adoption

A structured worksheet to monitor progress across your organization

Set your AI initiatives on the right track. Get the checklist.

RESEARCH

New data raises red flags for ChatGPT at work

As much as enterprises claim to be AI-ready, the most popular AI app might not be enterprise-ready.

Data published last week by Harmonic Security found that ChatGPT accounted for the clear majority of enterprise data exposures among AI apps in 2025. The research, which analyzed 22.4 million prompts across more than 660 AI apps, found that ChatGPT was responsible for more than 71% of data exposures while accounting for only 43.9% of prompts, roughly 1.6 times its share of usage.

Six tools in total — ChatGPT, Microsoft Copilot, Harvey, Google Gemini, Anthropic's Claude, and Perplexity — accounted for 92.6% of information exposure.

And as AI firms race to capture the enterprise market, they may be shipping products so quickly that vulnerabilities slip through the cracks. Anthropic’s buzzy new release, Claude Cowork, reportedly has a vulnerability that lets attackers use prompt injection to send a user's sensitive files to the attacker's own Anthropic account.

Still, AI model providers are eager to win over enterprises. Anthropic and Google have both inked deal after deal with major business customers. OpenAI, meanwhile, is winning with startups and small businesses.

OpenAI and Anthropic, in particular, are both vying to turn a profit before the decade is out, especially as they continue to spend billions on expansion and infrastructure. Given the fickle nature of the consumer market, they likely view enterprise as critical to achieving that goal.

All the major AI products from the big tech vendors share the same drawbacks baked into their DNA: hallucinations and data security risks. These problems aren’t unique to any one AI company and still haven’t been entirely solved, but when it comes to data exposure, ChatGPT is currently the worst offender. The real question is how much trust enterprises can place in these systems. The duty to protect against security risks doesn’t lie solely with AI companies, but also with the organizations that deploy these tools.

LINKS

Former OpenAI policy chief creates AI safety nonprofit for evaluating models

Listen Labs raises $69 million Series B at $500 million valuation

Data center construction stifled by a shortage of trade workers

Cloudflare acquires Human Native, an AI data marketplace

Massive AI data center uses as much water as 2.5 In-N-Outs annually

Voice AI startup ElevenLabs in talks to raise funding at $11 billion valuation

BaseFrame: Identifies design gaps, trade-offs and component availability for hardware teams.

Cumulus Labs: A GPU cloud that optimizes training and inference workloads preemptively, saving users up to 70%.

Clodo: An AI-powered go-to-market tool that finds customer prospects, automates campaigns and books meetings.

TeamOut AI: An AI that plans your event using nothing but simple prompts.

Netflix: Machine Learning Scientist (L4) - Content & Studio

TikTok: Research Scientist Graduate (Ads Integrity) - 2026 (PhD)

Electronic Arts: Security Engineer, AI Security

Google: Staff Developer Advocate, Cloud AI

POLL RESULTS

Do you believe that AI data centers have increased your energy bill?

Yes (30%)

No (17%)

Not yet, but I'm worried they will (38%)

Other (15%)

The Deep View is written by Nat Rubio-Licht, Sabrina Ortiz, Jason Hiner, Faris Kojok and The Deep View crew. Please reply with any feedback.

Thanks for reading today’s edition of The Deep View! We’ll see you in the next one.

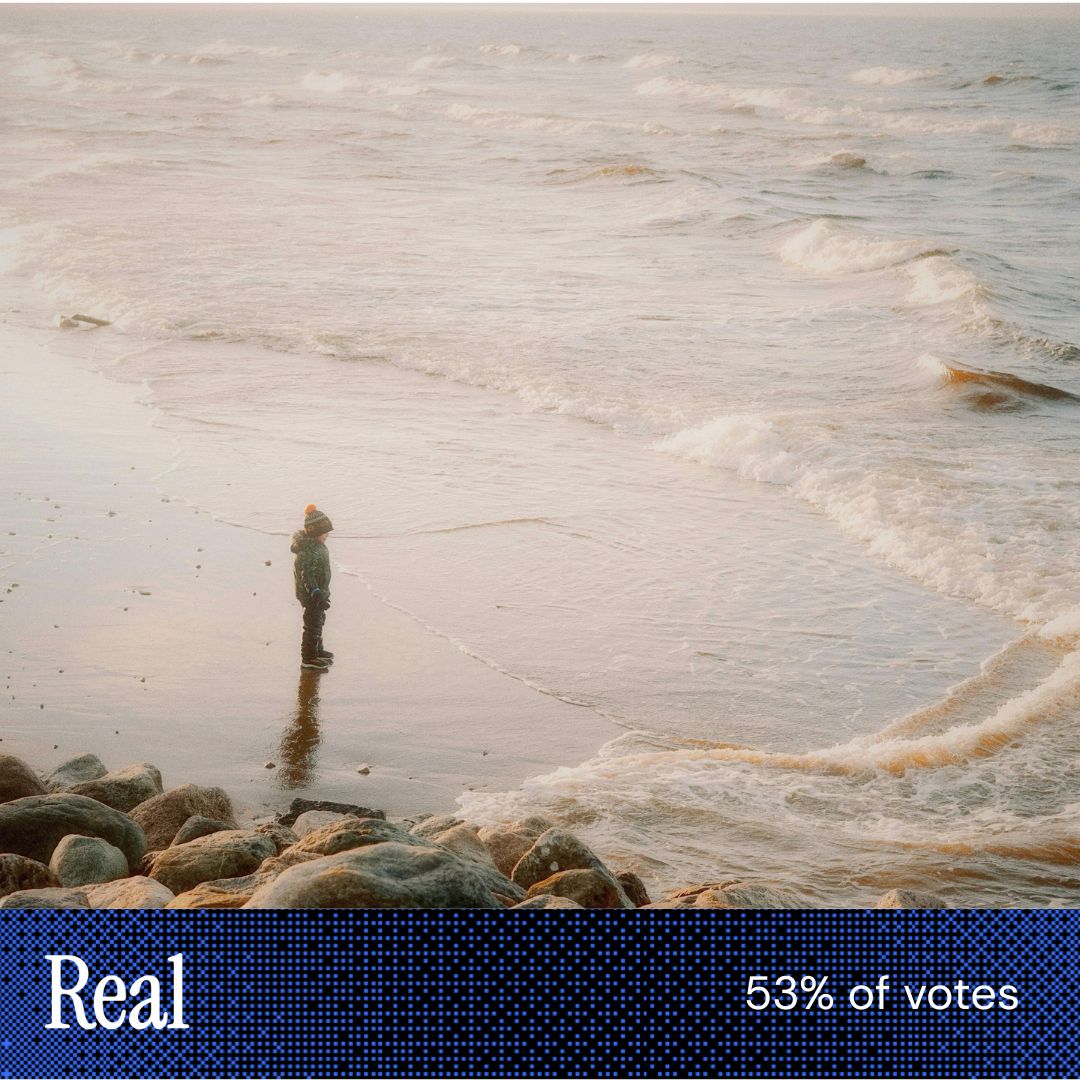

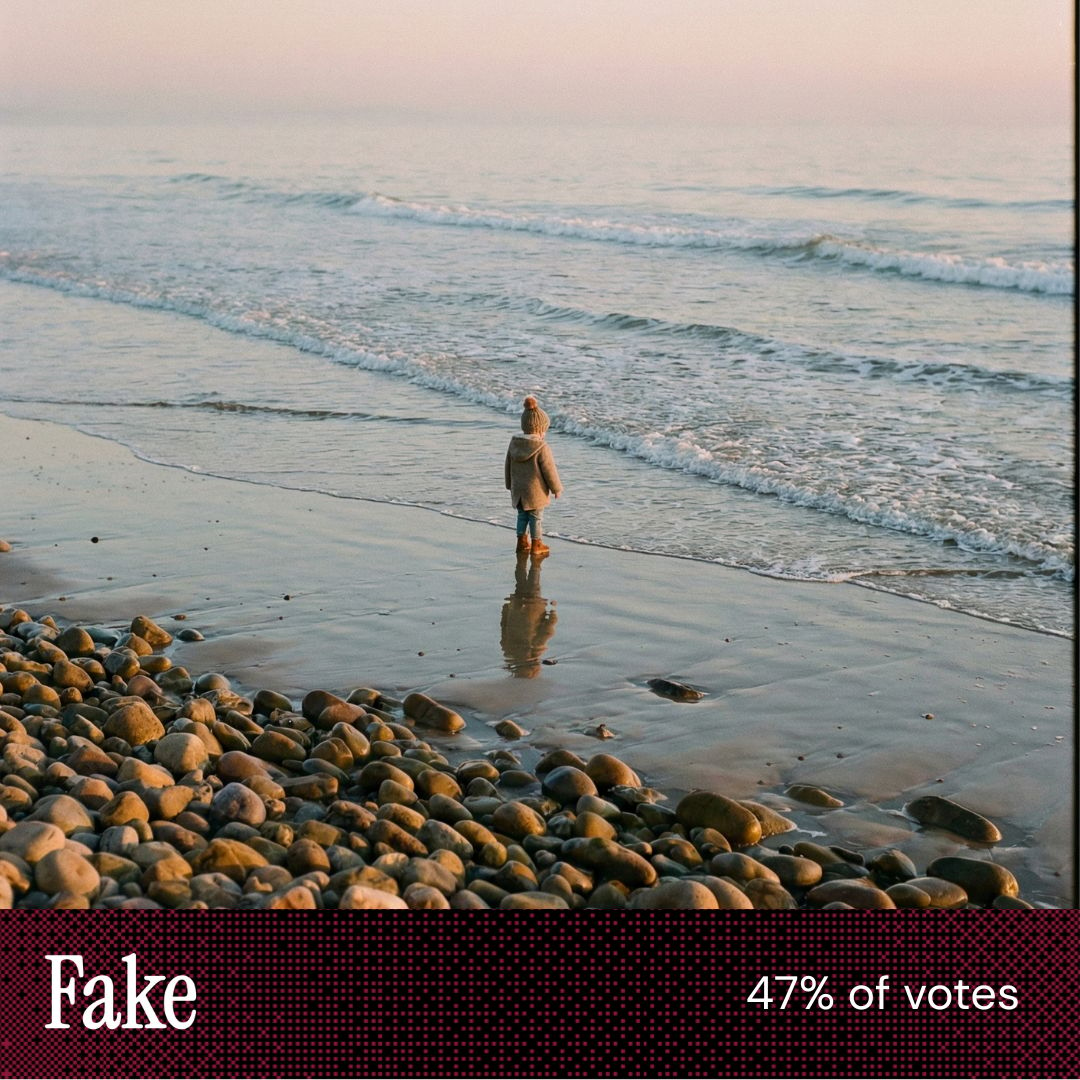

“Thought the shadow/reflection of the child on the wet sand was more realistic [in this image] than the mirror-like reflection in the other image.”

“Finally got one right! Felt [the] little one [was] too close to [the] waves for safety in [this image]. They would get soaked and/or knocked over.”

Take The Deep View with you on the go! We’ve got exclusive, in-depth interviews for you on The Deep View: Conversations podcast every Tuesday morning.

If you want to get in front of an audience of 750,000+ developers, business leaders and tech enthusiasts, get in touch with us here.