
Breakthrough: New class of AI tops LLMs

Welcome back. OpenAI's policy chief confirmed at Davos that the much-anticipated hardware device designed by Jony Ive is "on track" to be unveiled in the second half of 2026, though it likely won't reach consumers until early 2027. As I've said before, OpenAI's decision to go back to the drawing board, after originally planning a device like the ill-fated Humane AI Pin, was a smart move. We're now expecting something more like a smart earbud. Still, when we polled The Deep View audience, only 29% said they would consider buying a new form-factor AI device made by OpenAI. –Jason Hiner

1. Breakthrough: New class of AI tops LLMs

2. OpenAI at 2x Anthropic ARR, still bets on scaling

3. Turmoil clouds Murati's AI lab after defections

RESEARCH

Breakthrough: New class of AI tops LLMs

Audio and voice technologies have burst onto the scene as one of the hottest AI trends of 2026. And new research from audio tech startup Modulate could transform AI far beyond audio models.

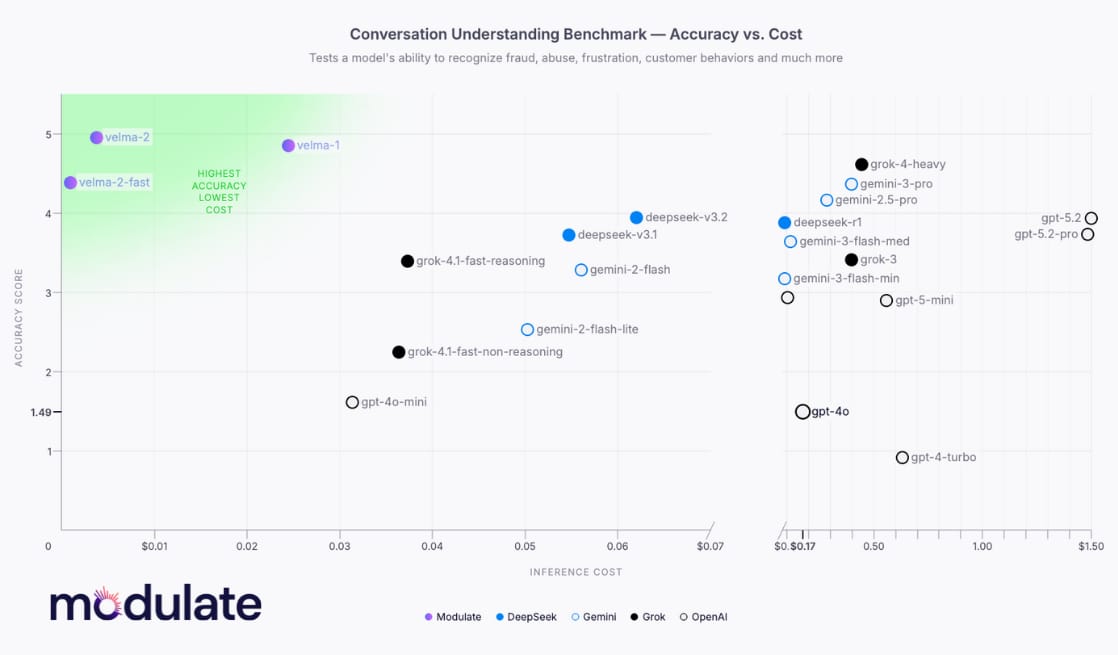

On Tuesday, Boston-based Modulate announced the Ensemble Listening Model (ELM), a novel approach to AI that it says can outperform LLMs on both cost and accuracy.

In an interview with The Deep View, Mike Pappas, CEO of Modulate, said, “We think we’ve actually cracked the code on how to do what I’m going to call heterogeneous ensembles… which we think is extremely relevant to the broader AI development community.”

The research involves:

- Combining hundreds of different small, specialized models (analyzing background noise, transcripts, emotions, cultural cues, synthetic voices, etc.)
- Bringing them together through Modulate's new heterogeneous ensemble architecture
- Using the company's dynamic real-time orchestration method to weave the signals together and produce a clear, accurate interpretation of what's happening in the conversation
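To make the idea of a heterogeneous ensemble more concrete, here is a minimal Python sketch. It assumes each specialist returns a scam-risk score plus a self-reported confidence, that the orchestrator skips specialists whose inputs are missing, and that the surviving scores are fused with a confidence-weighted average. The specialist names, the `Signal` type, the feature keys, and the weighting rule are all hypothetical stand-ins; Modulate's actual architecture and orchestration method are described in its paper and are not reproduced here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Signal:
    """Output of one specialist model (hypothetical format)."""
    name: str
    score: float       # estimated probability the call is a scam, in [0, 1]
    confidence: float  # how much the specialist trusts its own score, in [0, 1]


# A specialist takes a conversation's features and returns a Signal,
# or None if the inputs it needs are missing (e.g. no transcript available).
Specialist = Callable[[Dict[str, float]], Optional[Signal]]


def emotion_model(features: Dict[str, float]) -> Optional[Signal]:
    if "pitch_variance" not in features:
        return None
    # Toy rule: more agitated speech raises the risk score.
    score = min(1.0, features["pitch_variance"] / 100.0)
    return Signal("emotion", score, confidence=0.6)


def transcript_model(features: Dict[str, float]) -> Optional[Signal]:
    if "refund_keywords" not in features:
        return None
    # Toy rule: repeated refund/free-meal phrases raise the risk score.
    score = min(1.0, features["refund_keywords"] / 5.0)
    return Signal("transcript", score, confidence=0.9)


def synthetic_voice_model(features: Dict[str, float]) -> Optional[Signal]:
    if "synthetic_likelihood" not in features:
        return None
    return Signal("synthetic_voice", features["synthetic_likelihood"], confidence=0.8)


def orchestrate(features: Dict[str, float], specialists: List[Specialist]) -> float:
    """Run every applicable specialist and fuse their scores.

    Specialists that cannot run on this input are skipped; the rest are
    combined with a confidence-weighted average.
    """
    signals = [s for s in (spec(features) for spec in specialists) if s is not None]
    total_weight = sum(sig.confidence for sig in signals)
    if total_weight == 0:
        return 0.5  # no usable evidence: stay neutral
    return sum(sig.score * sig.confidence for sig in signals) / total_weight


SPECIALISTS = [emotion_model, transcript_model, synthetic_voice_model]

if __name__ == "__main__":
    call = {"pitch_variance": 80.0, "refund_keywords": 2.0}
    print(round(orchestrate(call, SPECIALISTS), 3))  # 0.56
```

Here the emotion specialist (score 0.8, confidence 0.6) and the transcript specialist (score 0.4, confidence 0.9) both run, the synthetic-voice specialist is skipped for lack of input, and the fused score is 0.84 / 1.5 = 0.56. A production system would presumably learn the weights and routing rather than hard-code them.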

Modulate cut its teeth performing voice analysis on online gaming platforms such as Call of Duty and Grand Theft Auto Online, where it helped the platforms analyze voice chat to identify hate speech and other policy violations.

Also released on Tuesday was Velma 2.0, Modulate's enterprise platform, which competes with conversational AI models from OpenAI, Google (Gemini), Microsoft, ElevenLabs, and others. Examples of enterprise uses of the Modulate platform include:

- Fraud detection: A food delivery company uses the software to flag emotionally manipulative callers trying to scam drivers out of free meals.
- Call center burnout and retention: Modulate’s aggression and emotion scores let customer support teams automatically grant associates short breaks after difficult calls, boosting well-being and retention.
- Protecting at-risk users: Modulate can read vocal cues such as age, distress, and confusion, then route at-risk users, such as children or seniors, to human agents, helping companies meet regulatory guidelines and minimize harmful AI interactions.
- AI agent oversight: Vendors can use Modulate to put guardrails on their AI voice agents, sensing user emotions so that agents respond appropriately and bots don't go off-policy.

Modulate released a research paper for other AI model builders to learn from the techniques behind its heterogeneous ensemble architecture and dynamic real-time orchestration.

The problem Modulate is trying to solve is extremely challenging and complex. Autonomous vehicles face a similar problem, known as "sensor fusion," in which an AI must combine signals from multiple sensors on the vehicle (cameras, radar, lidar, etc.) to make life-or-death decisions from conflicting information in real time. Modulate's approach of combining smaller, more expert models to produce cheaper, more accurate results than LLMs points to another emerging 2026 trend: small language models (SLMs) and domain-specific models running circles around the big LLMs from frontier labs.
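Sensor fusion has a textbook building block that shows why combining noisy, conflicting sources can beat relying on any single one: inverse-variance weighting, where each independent estimate is weighted by 1/σ² (the inverse of its variance). The sketch below fuses three made-up distance readings; the sensor labels and noise figures are assumptions for illustration, and this is the generic technique, not Modulate's method.

```python
from typing import List, Tuple


def fuse(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Returns the fused value and its variance. The fused variance is never
    larger than the smallest input variance, which is the payoff of fusion.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    # Hypothetical distance-to-obstacle readings in meters: (value, variance).
    readings = [
        (10.4, 0.25),  # camera
        (9.8, 0.04),   # lidar: most precise
        (10.9, 1.00),  # radar: noisiest at this range
    ]
    value, variance = fuse(readings)
    print(f"fused distance = {value:.2f} m, variance = {variance:.3f}")
```

With weights of 4, 25, and 1, the fused estimate lands at about 9.92 m, pulled toward the precise lidar reading, and its variance (1/30, about 0.033) is lower than that of any individual sensor.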

TOGETHER WITH BRIGHT DATA

Helping AI startups scale to production.

When AI startups leave the demo stage, the real constraints show up fast. That’s why we created the Bright Data AI Startup Program.

Teams building AI products that rely on live web data hit the same issues at the same stage: unreliable access, blocked sources, latency spikes, and pipelines that work in testing but break at production scale.

Bright Data provides the infrastructure for AI teams that depend on the web, turning reliable data into a strategic buffer. Eligible startups and AI labs can get up to $20K in credits to launch, run, and scale.

BIG TECH

OpenAI at 2x Anthropic ARR, still bets on scaling

OpenAI just unleashed new data to dispel rumors of a teetering AI bubble.

On Sunday, the company said that its annual recurring revenue more than tripled in 2025, hitting $20 billion, up from $6 billion in 2024. Its compute, meanwhile, followed a similar curve, growing more than threefold from 0.6 gigawatts in 2024 to roughly 1.9 gigawatts in 2025.

In a blog post, OpenAI CFO Sarah Friar called these gains “never-before-seen growth at such scale,” noting that more compute would have opened the door for “faster customer adoption and monetization.”

Friar said in the blog post that the company’s 2026 goal was “practical adoption,” aiming to enable people to actually leverage all of the possible benefits of AI, particularly with “large and immediate” use cases in health, science, and enterprise.

Doing so is only possible with more compute, she said, and revenue is what “funds the next leap.”

Based on these numbers, OpenAI’s revenue is more than double that of rival startup Anthropic, which estimated in October that it would hit $9 billion in annualized revenue by the end of 2025. However, Anthropic's growth trajectory is steeper: going from $1 billion at the beginning of 2025 to a projected $9 billion would be a 9x increase, compared with OpenAI's roughly 3.3x.
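The growth multiples in this story are simple ratios of the reported figures, so they are easy to sanity-check. The snippet below uses only the numbers cited here (OpenAI's stated ARR and compute for 2024 and 2025, and Anthropic's starting point and October projection); the variable names are illustrative.

```python
def growth_multiple(start: float, end: float) -> float:
    """How many times larger `end` is than `start`."""
    return end / start


# Figures as cited in this story: revenue in $B annualized, compute in gigawatts.
openai_revenue = growth_multiple(6, 20)      # 2024 -> 2025 ARR
openai_compute = growth_multiple(0.6, 1.9)   # 2024 -> 2025 compute
anthropic_revenue = growth_multiple(1, 9)    # start of 2025 -> projected end of 2025
openai_vs_anthropic = 20 / 9                 # relative size of 2025 revenue

for label, value in [
    ("OpenAI revenue growth", openai_revenue),
    ("OpenAI compute growth", openai_compute),
    ("Anthropic revenue growth", anthropic_revenue),
    ("OpenAI / Anthropic revenue", openai_vs_anthropic),
]:
    print(f"{label}: {value:.2f}x")
```

This prints 3.33x, 3.17x, 9.00x, and 2.22x respectively.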

The difference, however, is that OpenAI plans to spend far, far more on building out AI infrastructure than Anthropic does. OpenAI has a total of $1.4 trillion in commitments over the next eight years to build AI data centers across dozens of deals with the likes of SoftBank, Oracle, Nvidia, Amazon, and more. Anthropic, meanwhile, primarily uses Amazon as an infrastructure partner and has announced a $50 billion investment in data centers built with Fluidstack.

Though Anthropic may reach profitability first, OpenAI seems to believe it’s worth the risk.

“Securing world-class compute requires commitments made years in advance, and growth does not move in a perfectly smooth line,” Friar wrote. “At times, capacity leads usage. At other times, usage leads capacity.”

OpenAI's returns from its eye-popping investments hinge entirely on adoption growth. However, there's a big open question in the AI space right now about how much developers and users can do with powerful language models before returns start to diminish. That's why some of AI's most prominent figures, such as Yann LeCun, Fei-Fei Li, and Ilya Sutskever, are questioning the idea that the future of AI rests solely on scaling large language models, with several betting on world models for the next breakthrough. Make no mistake: OpenAI paired revenue and compute in this report from its CFO for a reason. It is drawing a connection between the two and signaling that it still believes in scaling laws and that more compute equals more revenue. Only time will tell who's right.

TOGETHER WITH ACI

Still manually resolving billing tickets?

"I paid but can't access." Failed payments. Subscription confusion. Cancellation requests. Your support team handles these daily by toggling between Stripe, your helpdesk, and your product—copying context, checking policies, making judgment calls.

Aci is an AI agent that does this automatically: diagnoses the issue, applies your policies, takes action or escalates with full context.

Diagnose Instantly: Agent checks Stripe, your product, and ticket history before responding.

Act Within Policy: Fixes access issues, resolves payment disputes, applies and improves on your retention playbook, escalates exceptions.

Full Audit Trail: Every resolution captures what was checked, what policy applied, and why—more visibility than human agents.

You Set the Guardrails: Configure escalation rules and approval workflows.

STARTUPS

Turmoil clouds Murati's AI lab after defections

Mira Murati left OpenAI to take AI systems in a different direction — one focused on efficient post-training and customization rather than the race to build ever-larger models. But recent events are calling the direction of her startup, Thinking Machines Lab (TML), into question — and the timing couldn’t be worse.

TML made headlines last week after a wave of resignations from its top talent. On Wednesday, Murati announced in an internal meeting that she had fired one of the company’s co-founders, CTO Barrett Zoph, for “poor performance and talking to competitors,” according to a report from The Information. During the same meeting, two more TML researchers, Luke Metz and Sam Schoenholz, put in their resignations via Slack, according to the same report.

Following this announcement, on Thursday, another two TML employees, researcher Lia Guy and engineer Ian O’Connell, announced their departures. The kicker: four of these five departing employees went to OpenAI.

While aggressive poaching is standard practice in the AI industry, as seen in the ongoing talent wars between Meta and OpenAI, several unique factors make this specific exodus particularly critical for TML.

As highlighted by The Information, the departures included two of the six original co-founders. This followed the departure of Andrew Tulloch, yet another co-founder, who left for Meta in the fall.

Like all AI research startups, TML needs to raise lots of capital to stay afloat, a pressure exacerbated by the fact that it is only a year old and has unveiled just one product since its inception.

This comes as the company seeks to raise $5 billion, five times its last round, in a deal that would value it at $50 billion.

These employee departures could be read as a lack of confidence in the company's trajectory and could weigh on investor sentiment. That effect is already showing: according to the same report, some investors say they are rattled.

Hope remains for Thinking Machines Lab, for now. Murati, the face of the company, is generally well-respected in the space and has avoided major scandals herself. The company is also reportedly working on some big projects that could come to fruition as early as this year. Nevertheless, internal turmoil is uniquely toxic for AI startups because it leaves a permanent mark on their reputation. Unlike a failed product launch, which can be fixed with updates, leadership chaos destroys the credibility essential for attracting talent, capital, and users. Anthropic remains the only major AI lab to have avoided any departures among its original seven co-founders. The recent departures at Thinking Machines Lab highlight again how unique and mission-driven the team at Anthropic remains.

LINKS

Sequoia reportedly joins Anthropic’s upcoming funding round

Claude’s web audience more than doubled year over year in December

UBTech Robotics signs deals to sell humanoid robots to Airbus, Texas Instruments

IMF predicts steady global growth hinging on AI investment boom

Google Gemini lets users skip AI’s thinking process with “answer now” button

AI chip firm Furiosa AI seeking $500 million Series D before IPO

Vercel Agent Skills: Turns protocol playbooks into reusable skills for AI coding agents.

21st Fund: Uses agentic AI to help startups find credits, grants and equity-free investments.

OS Ninja: An AI-guided learning platform to help developers discover open source with less complexity.

Aibriary Nova: An AI learning companion for personal growth with long-term memory, context awareness and personalized guidance.

ByteDance: Research Scientist, Responsible AI

CustomGPT.AI: Claude Code Expert

Apple: AI Research Scientist - Multimodal Intelligence

xAI: Member of Technical Staff, Frontiers of Deep Learning Scaling

A QUICK POLL BEFORE YOU GO

Will you stop using ChatGPT when it starts running ads?

The Deep View is written by Nat Rubio-Licht, Sabrina Ortiz, Jason Hiner, Faris Kojok and The Deep View crew. Please reply with any feedback.

Thanks for reading today’s edition of The Deep View! We’ll see you in the next one.

“Light and lines are more imperfect and natural.”

“Not sure how you could see the Milky Way that clearly with so much light in [this] photo.”

Take The Deep View with you on the go! We’ve got exclusive, in-depth interviews for you on The Deep View: Conversations podcast every Tuesday morning.

If you want to get in front of an audience of 750,000+ developers, business leaders and tech enthusiasts, get in touch with us here.