Our love-hate relationship with AI slop

Welcome back. Merry Slopmas. AI-generated content has taken over the internet, and the tipping point between the slop we hate and the slop we love to cringe at lies in how seriously it takes itself. However, as AI models improve, slop becomes more believable. Today’s send is entirely devoted to a deep dive on AI slop. Enjoy! —Nat Rubio-Licht

1. Our love-hate relationship with AI slop

2. Slop is winning for a reason

3. What happens when slop isn’t sloppy

CULTURE

Our love-hate relationship with AI slop

The Merriam-Webster 2025 word of the year was “slop.” And there’s a good reason for it.

Slop, defined by the organization as “digital content of low quality that is produced usually in quantity by means of artificial intelligence,” has proliferated across the internet so far and wide that your mother is probably sending you Facebook posts of cats and babies playing and asking you, “Is this AI, sweetie?”

To which you probably reply, “Yes, Mom, it is,” not just because of the unnatural bend of the baby’s arms or the cat having an extra paw, but because no cat in its right mind would allow a baby to tug on its tail without repercussion.

Slop is hard to avoid. One estimate published in October found that, of a random sample of 65,000 English-language articles published between January 2020 and May 2025, 50% were AI-generated. And while there’s no exact figure for how many AI videos are circulating on social media, OpenAI’s Sora app for making AI-generated videos hit a million downloads just five days after its launch, and slop has most certainly spread as a result.

While enterprises are gung-ho on adding AI to their recipe for success, consumers are largely on the fence. One study from Prosper Insights and Analytics found that 55% of consumers feel uncomfortable once they discover that content has been generated by AI. And 2025 has had its fair share of AI controversy that exemplifies this:

- In November, Spotify pulled EDM hit “I Run” by HAVEN from its platform following backlash over suspected AI-generated vocals;

- Many called for boycotts against brands Guess and Vogue in August after they subbed out real models (the human people kind) for AI-generated avatars;

- And McDonald’s had to pull its allegedly AI-created Christmas ad after an onslaught of viewer complaints that the clip was unsettling and creepy.

The reason for this is simple: People don’t like being lied to. Consumer trust in AI is already shaky at best, so when brands, creators and platforms either omit or obfuscate the fact that content is synthetic, viewers resent not only the organization that generated that content but also the tech that allowed it to do so.

CULTURE

Slop is winning for a reason

For every piece of slop we hated, there was an equally viral piece of slop that people loved to hate.

One example from recent history is the song “We Are Charlie Kirk.” The presumably AI-generated memorial power ballad completely took over social media this fall, with TikTok users memeing it in more than 300,000 videos that use the sound. The result was a No. 1 ranking on Spotify’s viral song chart in the US and globally, and a spot on the streaming service’s “Viral 50 - Global” playlist.

And this is far from the only example of AI-produced stuff that’s taken up space on our screens, in our earbuds and in our psyches:

- AI-generated slop music has amassed a wide fanbase for its utter absurdity, such as playlists filled with unhinged, synthetic country bangers by an artist named “AI Larry Bob” (listen at your own discretion).

- Videos of internet personality and boxer Jake Paul similarly took the internet by storm after he let Sora users go ham with his cameos, resulting in AI videos of him doing everything from makeup tutorials to competitive speed skating, some created and posted by Paul himself.

This is slop for the sake of slop. It’s not trying to be earnest, and it’s definitely not pretending to be anything that it’s not. So why do people love it? Because the people of the internet have always had a fondness for the absurd.

The popularity of completely nonsensical AI-generated slop is not because anyone is actually taking it seriously. These videos and songs are simply the new generation of a decades-old internet playbook, gaining traction for the same reason that people obsessed over Nyan Cat and Rickrolling in the early 2010s. Absurdity was essential to what made shortform video platform Vine so popular until the app shut down in 2017, and it remains a vital ingredient in the recipe for TikTok virality today.

Slop has always been the heart and soul of the internet. Now, it’s just easier to make.

CULTURE

When slop isn’t sloppy

The bigger problem with slop occurs when it’s not really slop at all.

AI-generated content runs a wide gamut, from content that is clearly fake to content that mimics reality so closely that it’s hardly discernible as AI-generated to the average eye, or even to a trained one.

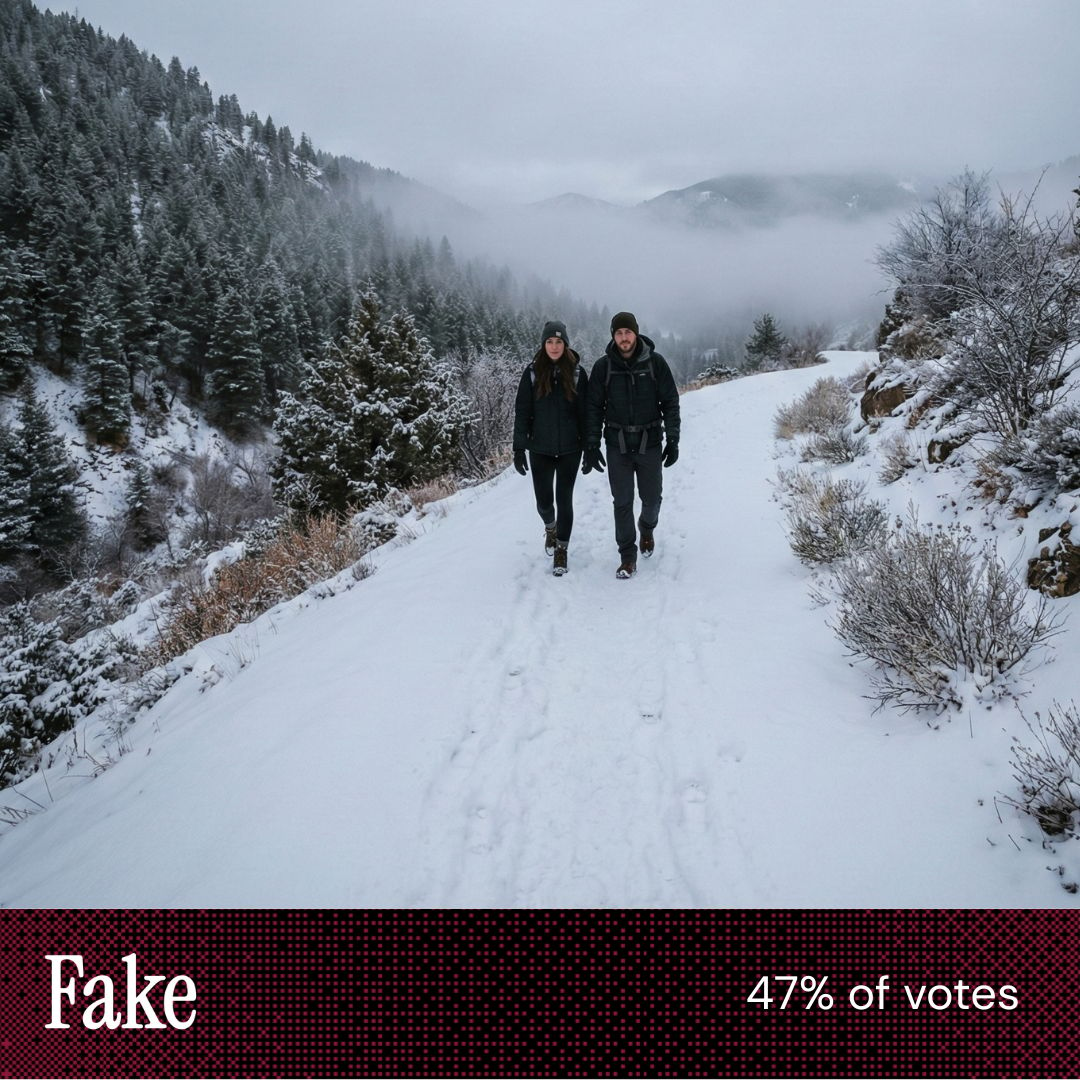

According to a Pew Research study, 53% of Americans aren’t confident in their ability to detect when something is AI-generated. And The Deep View’s data bears that out, too. We run our “AI or Not” game at the bottom of our newsletter every day, and our audience of AI professionals can only tell the real image from the AI image about 50% of the time, which is basically a coin flip.

“Over time, particularly as the models get better, the ability on a one-shot basis to be able to detect if something is AI or not is going to get worse,” Ben Nye, director of learning science at the University of Southern California, told The Deep View. “That’s basically inevitable.”

And a number of problems can spring from the fact that people are only getting worse at distinguishing real from fake. There’s the potential annihilation of jobs for artists and creators, with the tech having been widely protested and hotly debated in Hollywood over the past year. Higher quality fake media also means higher quality disinformation. AI-generated content is already being used for political disinformation campaigns, and fake evidence is starting to weave its way into court cases.

For the average consumer of media, figuring out who to trust is only going to get more difficult, and the old adage “don’t believe everything you see online” will only ring more true. And it may force consumers to alter their relationship with media entirely, said Nye, going back to creators, sources and artists they believe they can trust to be authentic.

“They may want to have more longitudinal relationships with who they’re consuming media from,” said Nye. “That used to be more of the case … but now we have this very recommendation-heavy tech environment.”

While we haven’t reached a point of such saturation just yet, authenticity is only becoming rarer, and sources you can trust are becoming more valuable. Enjoy your slop while it’s still sloppy, because eventually, the AI models are going to figure out the right number of fingers people are meant to have. It's going to get even more difficult to sort out the real deal from the fake stuff in 2026.

LINKS

- Trump Administration to impose tariffs on Chinese chip imports in mid-2027

- ServiceNow to acquire cybersecurity startup Armis for $7.75 billion

- Samsung to acquire ZF’s driver assistance unit for more than $1.5 billion

- Nigeria, Google in talks to build out undersea cable

- MiniMax M2.1: A new model for complex, real-world tasks, featuring “significantly enhanced” multi-language programming.

- Lemon Slice-2: A diffusion model that adds digital avatars to agents.

- Vibe Pocket: A cloud-based platform for running agents like Claude Code, Codex, opencode on mobile devices and web.

- OpenAI: Researcher, Robustness & Safety Training

- Amazon: Applied Scientist, LLM Code Agents, Kiro Science

- Riot Games: Principal Enterprise AI Engineer

- eBay: Applied Researcher 2 - Search Ranking

The Deep View is written by Nat Rubio-Licht, Jason Hiner, Faris Kojok and The Deep View crew. Please reply with any feedback.

Thanks for reading today’s edition of The Deep View! We’ll see you in the next one.
